I just got a new PC to play Black Myth: Wukong, and thought it would be interesting to see whether I could replicate my ComfyUI + Flux setup on it and compare the results with my Mac.

Step 1:

There are a couple of ways to install ComfyUI; more instructions here. The simplest approach is to download the standalone (portable) version, which is already optimized for NVIDIA GPUs. Get the download package here. You will need the archiving tool 7-Zip to decompress the file.

Decompress the file and place the extracted folder wherever you like on your PC.
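To confirm the archive extracted completely before moving on, a quick Python check like the one below can help (run it once Python is available, see Step 3). It is a minimal sketch: the install location C:\AI\ComfyUI_windows_portable is a hypothetical example, and the expected files are simply the ones this walkthrough uses later (run_nvidia_gpu.bat, ComfyUI\main.py and ComfyUI\requirements.txt).

```python
from __future__ import annotations

from pathlib import Path

# Hypothetical location: change this to wherever you extracted the archive.
PORTABLE_ROOT = Path(r"C:\AI\ComfyUI_windows_portable")

# Files the later steps rely on (relative to the portable folder).
EXPECTED = [
    "run_nvidia_gpu.bat",        # launcher used in Step 4
    "ComfyUI/main.py",           # ComfyUI entry point
    "ComfyUI/requirements.txt",  # dependency list used in Step 3
]


def missing_files(root: Path) -> list[str]:
    """Return the expected relative paths that do not exist under root."""
    return [rel for rel in EXPECTED if not (root / rel).exists()]


if __name__ == "__main__":
    missing = missing_files(PORTABLE_ROOT)
    if missing:
        print("Extraction looks incomplete, missing:", ", ".join(missing))
    else:
        print("Portable ComfyUI layout looks complete.")
```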

Step 2:

You will need the Flux AI model to work with ComfyUI. To use Flux1 dev FP8, download the model here. To use the bigger Flux1 Dev model, download it here. Note that you will need 16GB of VRAM on your NVIDIA GPU; if you have less than 16GB of VRAM, choose a smaller model such as Flux1 dev NF4, which you can get here. Move the downloaded Flux model file (e.g. flux1-dev-fp8.safetensors) into ComfyUI under “ComfyUI/models/unet”. This is a huge file, so make sure you have enough storage space.
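The 16GB rule of thumb above can be turned into a small helper that reads your GPU's VRAM through PyTorch (installed later in this guide) and suggests which download to pick. This is a hypothetical sketch: suggest_flux_variant and detect_vram_gb are illustrative names, and it assumes the 16GB note applies to both the FP8 and the full Dev downloads.

```python
from __future__ import annotations

# Rule of thumb from Step 2 (assumed to cover both FP8 and full Dev):
# 16GB of VRAM or more; below that, fall back to the smaller NF4 model.
VRAM_THRESHOLD_GB = 16


def suggest_flux_variant(vram_gb: float) -> str:
    """Suggest which Flux1 dev download fits a GPU with vram_gb of VRAM."""
    if vram_gb >= VRAM_THRESHOLD_GB:
        return "Flux1 dev FP8 / Dev"
    return "Flux1 dev NF4"


def detect_vram_gb() -> float | None:
    """Total VRAM of CUDA device 0 in decimal GB, or None if unavailable."""
    try:
        import torch  # installed later in this guide
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    # total_memory is in bytes; dividing by 1e9 keeps a card sold as
    # "16GB" at or above the threshold.
    return torch.cuda.get_device_properties(0).total_memory / 1e9


if __name__ == "__main__":
    vram = detect_vram_gb()
    if vram is None:
        print("No CUDA GPU detected (or PyTorch is not installed yet).")
    else:
        print(f"{vram:.1f} GB of VRAM -> {suggest_flux_variant(vram)}")
```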

You will also need the VAE file, ae.safetensors. Download it here and save it into the “ComfyUI/models/vae” folder.

Next, download the CLIP models from here and put them in the “ComfyUI/models/clip” folder. The files are clip_l.safetensors, t5xxl_fp16.safetensors and t5xxl_fp8_e4m3fn.safetensors.
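With files going into three different folders, a misplaced download is an easy mistake. The sketch below reports which of the files listed in this step are missing. The ComfyUI path is a hypothetical example, and the UNet file name assumes the FP8 download (flux1-dev-fp8.safetensors); swap in whichever Flux file you actually use.

```python
from __future__ import annotations

from pathlib import Path

# Hypothetical path: point this at the ComfyUI folder inside the portable install.
COMFYUI_DIR = Path(r"C:\AI\ComfyUI_windows_portable\ComfyUI")

# Where each downloaded file should live, per Step 2.
# The UNet file name is an assumption; use the Flux variant you downloaded.
REQUIRED = {
    "models/unet": ["flux1-dev-fp8.safetensors"],
    "models/vae": ["ae.safetensors"],
    "models/clip": [
        "clip_l.safetensors",
        "t5xxl_fp16.safetensors",
        "t5xxl_fp8_e4m3fn.safetensors",
    ],
}


def check_models(comfy_dir: Path) -> dict[str, list[str]]:
    """Map each model folder to the expected files missing from it."""
    report: dict[str, list[str]] = {}
    for folder, names in REQUIRED.items():
        missing = [n for n in names if not (comfy_dir / folder / n).is_file()]
        if missing:
            report[folder] = missing
    return report


if __name__ == "__main__":
    problems = check_models(COMFYUI_DIR)
    for folder, names in problems.items():
        print(f"{folder}: missing {', '.join(names)}")
    if not problems:
        print("All Flux model files are in place.")
```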

Step 3:

You will need Python installed on your PC. Because I also develop other projects in Python, I prefer to use Anaconda to manage the various Python versions and dependency libraries separately. Download and install Anaconda here.

Once Anaconda is installed, I create a new conda environment for ComfyUI:

conda create -n comfyUI python=3.9

Next, install the dependencies for ComfyUI. Go to the folder that contains requirements.txt (ComfyUI_windows_portable\ComfyUI), activate the environment and run:

conda activate comfyUI

pip install -r requirements.txt

This installs all the dependencies that ComfyUI requires. If you skip the activation step, pip installs them into your system Python environment instead of the conda environment you just created.
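If you are unsure whether the environment is active, the sketch below checks from inside Python before you run pip. It relies on the CONDA_DEFAULT_ENV and CONDA_PREFIX variables that conda activate sets; pip_target_ok is a hypothetical helper name, and comfyUI is the environment name created above.

```python
from __future__ import annotations

import os
import sys

EXPECTED_ENV = "comfyUI"  # name used in `conda create -n comfyUI` above


def active_conda_env() -> str | None:
    """Name of the activated conda environment, or None if none is active."""
    return os.environ.get("CONDA_DEFAULT_ENV")


def pip_target_ok(expected_env: str = EXPECTED_ENV) -> bool:
    """True if expected_env is active AND this interpreter belongs to it."""
    prefix = os.environ.get("CONDA_PREFIX")
    if active_conda_env() != expected_env or not prefix:
        return False
    # Compare install prefixes so a stray system Python on PATH is caught too.
    here = os.path.normcase(os.path.abspath(sys.prefix))
    there = os.path.normcase(os.path.abspath(prefix))
    return here == there


if __name__ == "__main__":
    if pip_target_ok():
        print(f"OK: packages will go into {sys.prefix}")
    else:
        print(f"Activate the environment first: conda activate {EXPECTED_ENV}")
```

Running pip as python -m pip from the same shell also ties the install to that exact interpreter.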

Step 4:

Now run the following command from the ComfyUI_windows_portable folder to start the ComfyUI process; you then access ComfyUI through your browser. Once it has started, the console shows something like “To see the GUI go to: http://127.0.0.1:8188”.

run_nvidia_gpu.bat

If you are on a Mac and not using the portable version of ComfyUI, start it with python main.py instead.
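To check from a script that the server is up (for example before sending it work), a standard-library probe of the address printed above is enough. This is a minimal sketch: comfyui_is_up is a hypothetical helper, and any HTTP response, even an error status, is treated as the server running.

```python
import urllib.error
import urllib.request

COMFYUI_URL = "http://127.0.0.1:8188"  # address ComfyUI prints on startup


def comfyui_is_up(url: str = COMFYUI_URL, timeout: float = 2.0) -> bool:
    """Return True if an HTTP server answers at url within timeout seconds."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server replied, just with an error status: it is still running.
        return True
    except OSError:
        # URLError (e.g. connection refused) and timeouts are OSError subclasses.
        return False


if __name__ == "__main__":
    state = "running" if comfyui_is_up() else "not reachable"
    print(f"ComfyUI at {COMFYUI_URL} is {state}.")
```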

Note:

If you are using a Python version other than 3.9 (for example 3.12), you might get an “Error loading fbgemm.dll” error.
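A guard that flags the interpreter version before you install anything can save a confusing DLL error later. A minimal sketch: 3.9 is simply the version that worked in this setup, not an official requirement, and version_warning is a hypothetical name.

```python
from __future__ import annotations

import sys

# The version that worked in this setup; others (e.g. 3.12) may hit
# "Error loading fbgemm.dll".
RECOMMENDED = (3, 9)


def version_warning(version_info=sys.version_info) -> str | None:
    """Return a warning if the interpreter is not the recommended minor version."""
    major, minor = tuple(version_info[:2])
    if (major, minor) != RECOMMENDED:
        return (
            f"Python {major}.{minor} detected; this setup worked with "
            f"{RECOMMENDED[0]}.{RECOMMENDED[1]}, and other versions may hit "
            "'Error loading fbgemm.dll'."
        )
    return None


if __name__ == "__main__":
    print(version_warning() or "Python version matches the tested setup.")
```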

Ensuring that PyTorch uses CUDA

NVIDIA users should install the stable PyTorch build with CUDA support using this command:

pip install torch torchvision torchaudio --extra-index-url https://download.pytorch.org/whl/cu124

To install the PyTorch nightly build instead, which might include performance improvements, use:

pip install --pre torch torchvision torchaudio --index-url https://download.pytorch.org/whl/nightly/cu124

Other Reference: https://www.geeksforgeeks.org/how-to-use-gpu-acceleration-in-pytorch/
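After installing, it is worth confirming that PyTorch actually sees the GPU instead of silently falling back to the CPU. The sketch below uses torch.cuda.is_available(), torch.version.cuda and torch.backends.mps.is_available(); describe_torch_backend is a hypothetical helper name. On an NVIDIA PC, a cuda_build of None means a CPU-only wheel is installed, so the cu124 command above is the one to rerun.

```python
from __future__ import annotations


def describe_torch_backend() -> dict[str, object]:
    """Report which accelerator PyTorch can use: 'cuda', 'mps' or 'cpu'."""
    try:
        import torch
    except ImportError:
        return {"torch": None, "cuda_build": None, "device": "cpu"}

    info: dict[str, object] = {
        "torch": torch.__version__,
        # CUDA version the wheel was built against; None for CPU-only/Mac wheels.
        "cuda_build": torch.version.cuda,
    }
    if torch.cuda.is_available():
        info["device"] = "cuda"
        info["gpu"] = torch.cuda.get_device_name(0)
    elif hasattr(torch.backends, "mps") and torch.backends.mps.is_available():
        # Apple Silicon: Metal Performance Shaders backend.
        info["device"] = "mps"
    else:
        info["device"] = "cpu"
    return info


if __name__ == "__main__":
    for key, value in describe_torch_backend().items():
        print(f"{key}: {value}")
```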

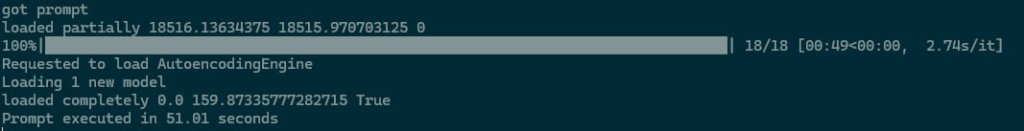

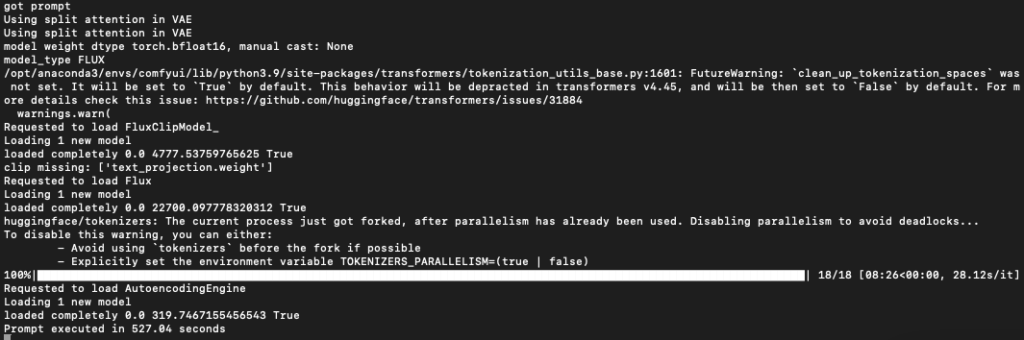

Image generation performance compared with the Mac

Using the same prompt and settings, the RTX 4090 PC (24GB VRAM, 64GB RAM) with GPU acceleration is much faster than the Mac M2 Ultra with 192GB of RAM.

Note: PyTorch on the Mac was already using MPS (Metal Performance Shaders) acceleration.
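The comparison above is qualitative; to put numbers on it, each machine can time the same workflow the same way. The sketch below is hypothetical: generate stands for whatever triggers one image generation on your setup, and the warm-up run is excluded because the first run typically includes loading the models.

```python
import statistics
import time
from typing import Callable, Dict


def time_runs(generate: Callable[[], object], runs: int = 3, warmup: int = 1) -> Dict[str, float]:
    """Time a generation callable; warm-up calls are excluded from the stats."""
    for _ in range(warmup):
        generate()
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        generate()
        samples.append(time.perf_counter() - start)
    return {
        "median_s": statistics.median(samples),
        "min_s": min(samples),
        "max_s": max(samples),
    }


def speedup(baseline_s: float, candidate_s: float) -> float:
    """How many times faster candidate is than baseline (e.g. Mac vs 4090)."""
    return baseline_s / candidate_s
```

Comparing medians rather than single runs keeps one slow outlier from skewing the result.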